In today’s fast-paced digital age, businesses, both big and small, rely heavily on online visibility. The coveted top spots on search engine result pages (SERPs) can make a significant difference in user traffic, brand credibility, and ultimately, revenue. But there’s a behind-the-scenes player that can heavily influence your website’s SEO health: the Crawl Budget. Understanding and optimizing it can make the difference between search engine success and digital obscurity.

What’s Crawl Budget in Simple Terms

Before diving into the nitty-gritty of crawl budgets, let’s step back and understand search engine crawling. In essence, search engines like Google use “bots” or “spiders” to “crawl” webpages.

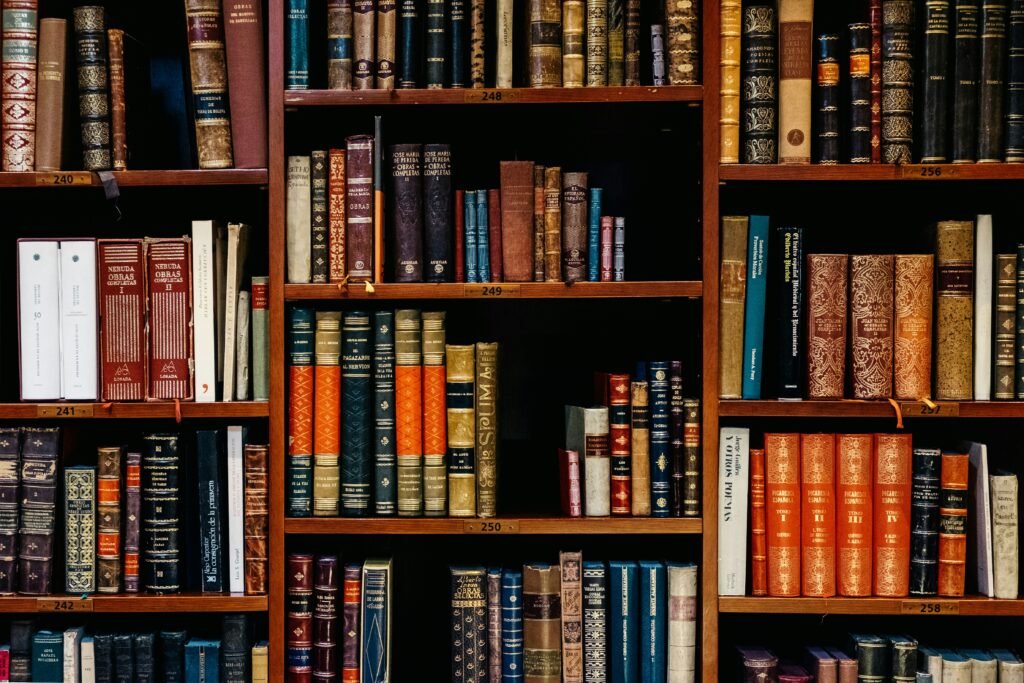

These bots visit and scan pages, collecting data, which is then indexed and used to serve search results to users. Think of them as digital librarians collecting books (web pages) to add to the vast online library (search engine).

Now, these digital librarians don’t have unlimited time. The ‘Crawl Budget’ refers to the number of pages a search engine bot will crawl on your site within a given timeframe. It comprises two main components:

- Crawl Rate: How many requests per second a bot makes while crawling a site. Search engines cap this rate so that the bot doesn’t overload your server.

- Crawl Demand: Determined by the site’s popularity and how often content gets updated.

Real-life analogy

Imagine you’re at a buffet. The variety of dishes (web pages) is overwhelming. You have limited time and appetite (Crawl Budget). So, you prioritize what dishes (pages) you want to try based on your preferences (site’s popularity, freshness of content). This selective tasting is much like how search engine bots operate with a crawl budget.

Factors That Influence Crawl Budget

The efficiency with which search engines index a website, especially large sites with thousands of pages, hinges on the crawl budget. Crawl budget optimization ensures that the most important pages on your site are discovered and indexed promptly. Let’s delve deeper into the critical factors that play a substantial role in determining your site’s crawl budget.

Website Server Health

The health and responsiveness of your website server is foundational. When search engine bots, such as Googlebot, attempt to crawl a website, they require swift server responses. Slow or unresponsive servers can limit the number of pages that the bot can crawl within its allocated time, thereby shrinking your effective crawl budget.

Factors affecting server health include:

- Server Speed: If your server takes too long to respond, bots might crawl fewer pages to avoid overloading the server.

- Uptime/Downtime: Regular server downtimes, even if they are short-lived, can dissuade bots from crawling often.

- Server Location and Hosting: Quality hosting solutions and content delivery networks (CDN) can ensure that bots from different regions access your site efficiently.

For a startup founder, investing in premium hosting with a reliable uptime guarantee is a non-negotiable first step. Even a short period of downtime or slow server response can leave important pages uncrawled, which could be the difference between being found online and obscurity.

Website’s Internal Linking Structure

Internal linking is akin to the road network of a city. Efficient and logical interlinking ensures that search engine bots can find, crawl, and index all your content without unnecessary detours.

- Depth of Pages: Pages buried deep within your site, requiring many clicks to access, are less likely to be crawled frequently.

- Orphan Pages: Without any internal links pointing to them, these pages often remain undiscovered by search bots.

- Consistent Linking: Regularly linked-to pages signal their importance to search bots, increasing the likelihood of frequent crawls.

By crafting an intentional and comprehensive internal linking strategy, businesses ensure that all of their valuable content remains within easy reach of search engine bots.
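Page depth and orphan pages can both be checked mechanically once you have a list of which pages link to which. The sketch below is a minimal illustration, not a full crawler: the `SITE_LINKS` map and its URLs are made up, and in practice the link data would come from a crawl export or your CMS.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage; returns {url: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, all_pages, home):
    """Pages that no other page links to (the homepage itself is excluded)."""
    linked = {target for targets in links.values() for target in targets}
    return sorted(p for p in all_pages if p not in linked and p != home)


# Hypothetical site: keys are pages, values are the internal links found on them.
SITE_LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/products/widget"],
    "/old-landing": ["/"],
}
ALL_PAGES = set(SITE_LINKS) | {"/products/widget"}

depths = click_depths(SITE_LINKS, "/")
orphans = orphan_pages(SITE_LINKS, ALL_PAGES, "/")

for url, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, url)
print("Orphans:", orphans)
```

Pages with a high depth value are candidates for extra internal links from higher-level pages, and anything in the orphan list has no internal path leading to it at all.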

Site Updates Frequency

Search engines aim to deliver the most recent and relevant information. A frequently updated website signals that new or refreshed content awaits the bots.

- Fresh Content: Regularly adding new content prompts bots to crawl your site more often.

- Content Modifications: Even minor updates to important pages can invite search bots.

- Deletions and Additions: When pages are added or removed, your internal linking structure changes, potentially affecting crawl frequency.

Thus, startups and businesses should maintain a consistent content update schedule, ensuring search engines recognize the site’s dynamism and relevance.

Hurdles like Site Errors

Site errors can drastically curtail the number of pages a search bot crawls. Whether it’s a ‘404 Page Not Found’ error or issues with the robots.txt file, such obstacles waste the bot’s time and can reduce your effective crawl budget.

- 404 Errors: They waste the bot’s time as there’s nothing to index on these pages.

- Soft 404s: These are pages that the server returns with a success status (200 OK) even though the content isn’t available, which misleads bots into treating them as real pages.

- Robots.txt Disallows: Incorrectly disallowing essential directories can mean vital content remains uncrawled.

By proactively monitoring for and rectifying site errors, businesses can ensure that their crawl budget is utilized optimally.

Why Crawl Budget is Important

In the vast ocean of the internet, websites vie for visibility, and standing out is no mean feat. It’s not just about designing an attractive site or having stellar content. Underneath these layers, a game of chess plays out between websites and search engine crawlers. Here, the term “crawl budget” is paramount. Let’s dive into why it holds such significance.

Ensuring all essential pages get crawled and indexed

For any organization, visibility is key. Imagine spending significant resources on a new service page, a blog post targeting a particular audience, or a landing page for a new product, only to realize that it’s invisible to search engine users. This is where crawl budget steps in.

- Valuable Content Discovery: Search engine bots have finite resources. They won’t crawl every single page of every site daily. A well-optimized crawl budget ensures that your most crucial pages are frequently visited and updated in search engine databases.

- Rapid Content Updates: In sectors like news or e-commerce, where content is updated multiple times a day, it’s paramount for these changes to reflect in search results quickly. A healthy crawl budget ensures your audience gets the latest, most relevant information.

- Competitive Edge: In industries where multiple players offer similar services or products, having your latest offerings and pages indexed rapidly can give you a competitive advantage.

To put it succinctly for a startup founder: it’s like ensuring every product in a vast store gets its moment in the storefront window.

Importance for websites with thousands of pages

Large websites, especially e-commerce platforms, news sites, or forums, present unique challenges.

- Frequent Updates: Large e-commerce sites introduce new products regularly. News sites have hourly updates. Ensuring all these pages get the attention they deserve from search engine bots becomes a herculean task without optimizing the crawl budget.

- User Engagement: If a user hears about a new product but can’t find it on their trusted search engine, their next step might be to visit a competitor’s site. Every page that’s not indexed is a potential lost engagement or sale.

- Resource Management: For vast sites, server resources are a concern. Ensuring bots efficiently crawl without overloading servers requires a delicate balance, achieved through effective crawl budget management.

Think of managing crawl budgets as optimizing supply chain logistics. Every product (page) needs to reach its intended audience (get indexed), and the supply chain (crawl budget) ensures this happens seamlessly.

Impact on SERPs and user experience

User experience doesn’t just start when a user clicks on your website. It begins with their search query.

Relevancy: If your site’s latest content isn’t indexed, users might land on outdated pages. This can lead to decreased satisfaction, affecting bounce rates and conversions.

Authority: Regularly updated content, when indexed promptly, positions your site as an authority. Over time, this boosts trust, which can lead to higher click-through rates and user engagement.

Site Performance: Bots crawling non-essential or error pages can strain server resources. Optimizing the crawl budget ensures bots focus on relevant pages, leading to faster load times and enhanced user experience.

For decision-makers, consider the SERP as the first touchpoint in the user’s journey. Ensuring they see the most relevant, recent content from your site can set the tone for their entire interaction.

Common Issues that Waste Crawl Budget

Navigating the intricacies of the digital realm can often feel like captaining a ship through uncharted waters. While setting a course for SEO success, you might unknowingly hit icebergs that eat away at your crawl budget. By understanding and addressing these issues, you can steer clear of potential pitfalls and keep your website sailing smoothly toward optimal visibility.

Duplicate Content Issues

In the context of SEO, duplicate content is like déjà vu—a situation where multiple pages on your website present identical or strikingly similar content.

Why it’s a Concern: Search engine bots, when encountering duplicate pages, might struggle to decipher which version to index. This leads to wasted crawl budget as bots inefficiently navigate these redundancies.

Solutions: Using canonical tags can be a lifesaver. They signal to search bots which version of the content is the ‘master’ or preferred one, ensuring they don’t waste time on duplicate pages. Another approach is to consistently create unique and value-driven content for each page.

On-site Errors like 404s

404 errors, commonly known as “Page Not Found” errors, act as dead ends for both users and search engine bots.

Why it’s a Concern: When bots encounter 404s, it disrupts their crawling pattern. Each error consumes part of the crawl budget, reducing the efficiency of the indexing process.

Solutions: Regularly monitor your site for broken links and swiftly address any found. Implementing 301 redirects from the broken page to a relevant active page can also be a robust strategy.

Poor Site Architecture

Think of site architecture as the foundation of a building. A weak foundation can compromise the entire structure.

Why it’s a Concern: If your site’s architecture is convoluted, search bots might face difficulties navigating and indexing pages. This can lead to essential pages being overlooked.

Solutions: Adopting a flat site structure, where important pages are just a few clicks away from the homepage, is ideal. Clear, logical internal linking also aids bots in efficiently crawling the site.

Redirect Chains and Loops

Redirect chains occur when a URL redirects to another, which in turn redirects to yet another, and so on. Loops are when a URL ends up redirecting back to itself through a series of redirects.

Why it’s a Concern: Both situations can confuse and trap search engine bots, wasting precious crawl budget and potentially preventing proper indexing.

Solutions: Regularly audit your redirects. Ensure that they’re direct, minimizing the number of hops. For instance, if Page A redirects to Page B, which redirects to Page C, it’s more efficient to have Page A redirect straight to Page C.
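A redirect audit of this kind is easy to script. The following sketch assumes you can export your redirect rules as a simple source-to-target map; the `REDIRECTS` data is made up for illustration. It traces each chain, flags loops, and produces a flattened map in which every source points straight at its final destination.

```python
def trace_redirects(redirects, start):
    """Follow a redirect map from `start`; returns (path, is_loop)."""
    path = [start]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            # We have been here before, so the chain never terminates.
            return path + [nxt], True
        path.append(nxt)
    return path, False


def flatten(redirects):
    """Point every source directly at its final destination; loops are left out."""
    flat = {}
    for source in redirects:
        path, is_loop = trace_redirects(redirects, source)
        if not is_loop:
            flat[source] = path[-1]
    return flat


# Hypothetical redirect rules: /a -> /b -> /c is a chain, /x <-> /y is a loop.
REDIRECTS = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}

for source in REDIRECTS:
    path, is_loop = trace_redirects(REDIRECTS, source)
    label = "LOOP" if is_loop else f"{len(path) - 1} hop(s)"
    print(" -> ".join(path), f"[{label}]")

print(flatten(REDIRECTS))
```

Any chain reported with more than one hop is a candidate for collapsing, and loops need to be fixed by hand because they have no valid destination.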

Strategies for Optimizing Crawl Budget

Knowing what wastes crawl budget is only half the job. The other half is applying the tools and techniques that steer search engine bots toward the pages that matter. This is where optimizing your website’s crawl budget comes into play.

Enhancing Site Speed and Server Response

A slow-loading website can be detrimental not just to user experience, but also to your crawl budget. Search engine bots have limited time and resources; hence, a faster site ensures more pages get crawled.

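- Optimize Images: Large image files are often the main culprits. Tools like ImageOptim or TinyPNG can reduce file sizes without noticeably compromising quality.

- Leverage HTTP Caching: Browser caching reduces the load time for returning visitors. For bots, the related mechanism is the conditional request: when your server sends accurate Last-Modified or ETag headers, a crawler that supports them can be answered with a 304 Not Modified response for unchanged pages instead of downloading them again.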

- Use Content Delivery Networks (CDN): CDNs like Cloudflare or Akamai spread your site content across a network of servers, ensuring users (and bots) access your site from a server near them, speeding up load times.
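For crawlers, the caching point above mostly comes down to HTTP conditional requests: a bot that already holds a copy of a page can send an If-Modified-Since header, and the server can reply 304 Not Modified instead of resending the page. The sketch below shows only the decision logic, under the assumption that you know each page's last-modified time; `response_status` and `PAGE_LAST_MODIFIED` are illustrative names, not part of any framework, and most web servers and CDNs already implement this for static files.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def response_status(last_modified, if_modified_since=None):
    """Return 304 if the page has not changed since the crawler's copy, else 200."""
    if not if_modified_since:
        return 200
    try:
        crawler_copy = parsedate_to_datetime(if_modified_since)
    except (TypeError, ValueError):
        return 200  # unparseable header: serve the full page
    if crawler_copy.tzinfo is None:
        crawler_copy = crawler_copy.replace(tzinfo=timezone.utc)
    # HTTP dates have one-second resolution, so drop microseconds before comparing.
    if last_modified.replace(microsecond=0) <= crawler_copy:
        return 304
    return 200


# Hypothetical page that was last edited on 1 May 2024 at 10:00 UTC.
PAGE_LAST_MODIFIED = datetime(2024, 5, 1, 10, 0, tzinfo=timezone.utc)
header_value = format_datetime(PAGE_LAST_MODIFIED, usegmt=True)

print(header_value)
print(response_status(PAGE_LAST_MODIFIED, header_value))
print(response_status(PAGE_LAST_MODIFIED, "Tue, 30 Apr 2024 10:00:00 GMT"))
```

The important design point is that the last-modified time must be truthful: reporting every page as freshly modified on each request defeats the purpose.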

Proper Utilization of Robots.txt

Think of robots.txt as the gatekeeper. It tells search bots which parts of your website to crawl and which to avoid.

Disallow Unimportant Pages: Keep search engines focused on your most important pages. For instance, internal search results or admin pages rarely offer value to users from a search perspective and can be excluded.

Stay Updated: As your site grows and evolves, so should your robots.txt file. Regularly revisit and modify it to align with your current site structure and goals.
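Because a single wrong line in robots.txt can block an entire section, it helps to test the file against URLs you care about whenever it changes. The sketch below uses Python's standard `urllib.robotparser`; the rules and example.com URLs are made up for illustration. Note that this parser does simple prefix matching and may not reproduce every search engine's handling of wildcards, so treat it as a sanity check rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps bots out of internal search and admin pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /admin/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the subset of `urls` that the given robots.txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


MUST_BE_CRAWLABLE = [
    "https://example.com/",
    "https://example.com/products/widget",
]
SHOULD_BE_BLOCKED = [
    "https://example.com/search?q=shoes",
    "https://example.com/admin/login",
]

accidentally_blocked = blocked_urls(ROBOTS_TXT, MUST_BE_CRAWLABLE)
still_blocked = blocked_urls(ROBOTS_TXT, SHOULD_BE_BLOCKED)

print("Important URLs blocked by mistake:", accidentally_blocked)
print("Low-value URLs correctly blocked:", still_blocked)
```

Running a check like this before deploying a new robots.txt catches the common mistake of disallowing a directory that contains pages you want crawled.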

Tackling Duplicate Content Using Canonical Tags

Duplicate content can significantly drain your crawl budget. Search engines can waste time crawling multiple versions of the same content. Canonical tags signal to search engines which version of a page they should consider as the “original” and prioritize for indexing.

Audit Your Site: Use tools like Screaming Frog to identify duplicate content.

Implement Canonical Tags: Once identified, set the preferred version using the canonical tag. This way, even if bots come across duplicates, they know which one to prioritize.
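To verify that a page declares the canonical URL you expect, you can read the tag straight out of its HTML. This is a minimal sketch using only the standard library; the `PAGE` markup and URL are invented, and a real audit would fetch each page and compare the result against the URL that was requested.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in `html`, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical product page that could be reachable under several URLs.
PAGE = """<html><head>
<title>Blue Widget</title>
<link rel="canonical" href="https://example.com/products/blue-widget">
</head><body>...</body></html>"""

canonical = find_canonical(PAGE)
print(canonical)
```

Pages that return `None`, or that point to a URL you did not intend, are the ones to review first.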

Cleaning up Error Pages and Handling Redirects Efficiently

Error pages and redirects can act like roadblocks, diverting or even halting the journey of search bots through your site.

Monitor 404 Errors: Regularly check for broken links and rectify them. Tools like Ahrefs can be particularly useful for this.

Limit Redirect Chains: The fewer steps a bot has to take to reach a page, the better. Keep your redirect chains short and direct.

Implementing an Efficient XML Sitemap

A sitemap acts like a map for search bots, guiding them through the most important pages on your site.

Stay Updated: Ensure your sitemap is regularly updated to reflect new content.

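Prioritize Important Pages: List only the canonical, indexable URLs you actually want in search results, and keep each entry’s lastmod date accurate so that new and updated pages stand out. Google’s documentation says it ignores the sitemap priority and changefreq fields, so marking a page as high priority there is unlikely to speed up its indexing on Google.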
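Keeping a sitemap current is easiest when it is generated rather than hand-edited. The sketch below builds a small sitemap with the standard library, including a `lastmod` date for each URL; the page list and dates are made up, and in practice they would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, 'YYYY-MM-DD' last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
PAGES = [
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/products/widget", "2024-04-28"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Regenerating the file as part of your publishing workflow means new pages appear in the sitemap as soon as they go live.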

Advanced Tactics for Large Websites

Large websites, particularly e-commerce sites and digital publications, often house millions of pages. Such vast virtual expanses require advanced tactics to ensure the most critical pages are frequently crawled.

Using log file analysis for insights on bot activity

Log files provide granular data about every request made to your server. By analyzing log files, you can discern how search engine bots interact with your site. It can unveil vital insights like:

- Which pages are frequently crawled?

- Are there any areas of the site bots are overlooking?

- Is the bot encountering errors?

Understanding and acting on this information can be instrumental in directing the crawl budget where it’s needed most.
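The three questions above can be answered with a short script over your access logs. The sketch below assumes the common "combined" log format used by default in Apache and Nginx; the sample lines are invented. It also identifies Googlebot by user-agent string alone, which can be spoofed, so a production audit would additionally verify the requesting IP addresses.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user-agent parts of a combined log line.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count Googlebot requests per path and per HTTP status code."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match and "Googlebot" in match.group("agent"):
            paths[match.group("path")] += 1
            statuses[match.group("status")] += 1
    return paths, statuses


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical log lines.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/May/2024:06:25:01 +0000] "GET /products/widget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:09:12:44 +0000] "GET /products/widget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:09:13:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.7 - - [10/May/2024:09:15:30 +0000] "GET /blog HTTP/1.1" 200 8841 "-" "{BROWSER_UA}"',
]

paths, statuses = googlebot_hits(SAMPLE_LOG)
print("Most crawled:", paths.most_common(5))
print("Status codes:", dict(statuses))
```

Comparing the crawled paths against your full URL list shows which sections bots are overlooking, and a rising share of 4xx or 5xx statuses points to errors worth fixing.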

Paginating content correctly

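For websites housing extensive content, like online catalogues or archives, pagination becomes essential. Proper pagination ensures that bots can crawl through long lists without getting trapped or missing content. Give every page in the series its own URL and link the pages to one another with ordinary, crawlable anchor links. The rel="next" and rel="prev" tags can still be added and may help other search engines, but Google has stated that it no longer uses them as an indexing signal, so they shouldn’t be the only thing connecting a paginated series.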

Prioritizing high-value pages for crawling

Not all pages on a website are equally important. Some pages, like new product listings or recently updated articles, might be more critical. The XML sitemap points search engine bots toward these high-priority areas, while the robots.txt file keeps them out of low-value sections, leaving more of the crawl budget for the pages that matter.

Leveraging Cloud platforms and CDNs for optimal server performance

A site’s responsiveness impacts crawl budget. Utilizing cloud platforms or Content Delivery Networks (CDNs) can bolster server performance, making your website more responsive to search engine bots, hence optimizing the crawl budget.

Measuring and Monitoring Crawl Budget

In the world of business, a common adage reminds us, “You can’t manage what you can’t measure.” This rings true for SEO as well. Monitoring your website’s interaction with search engine bots gives you the insights needed to make informed decisions, ensuring that your SEO strategy remains on track.

Google Search Console Insights to keep track of crawling

Imagine setting out on an expedition without a map or compass. It’s a perilous journey, right? Similarly, venturing into SEO without the right tools can lead to unnecessary pitfalls.

Google Search Console (GSC) is an indispensable tool for any website owner. It’s akin to a health report card, detailing how Google perceives your site.

Coverage Report: Gives an overview of indexed pages and highlights any issues Google encountered during crawling. Recent versions of Search Console label this report “Page indexing.”

Performance Report: Measures how well your site is doing in search results, including click-through rates and impressions.

Crawl Stats Report: This is the compass for understanding crawl budget. It displays Googlebot’s activity on your site over the last 90 days: how many requests it made, what it downloaded, and any problems it ran into.

Interpreting Crawl Stats and Addressing Anomalies

Numbers and stats are just data unless you can interpret them and derive actionable insights.

Identifying Patterns: Recognizing how often Googlebot crawls your site, and which pages it frequents, can shed light on the health of your crawl budget. A sudden drop in crawl frequency might indicate an issue that needs addressing.

Anomalies and Red Flags: Dramatic spikes in server errors, a growing number of blocked URLs, or an unexpected decline in indexed pages are all red flags. Addressing these promptly can mean the difference between SEO success and obscurity.

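Balancing Crawl Rate with Server Load: If Googlebot is overloading your server, the lasting fix is usually better server capacity and response times, since Googlebot slows down on its own when responses become slow or start failing. The manual crawl rate setting that older versions of GSC offered has been retired by Google; for a short-term emergency, Google’s guidance is to temporarily return 500, 503, or 429 status codes. Be cautious, though: throttling the bot for too long can lead to infrequent indexing.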
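Spotting a sudden drop in crawl frequency does not have to rely on eyeballing a chart. The sketch below flags any day whose crawl request count falls below half of the average for the preceding week. The `DAILY_REQUESTS` figures are invented, and both the seven-day window and the 50% threshold are arbitrary assumptions to tune for your own site.

```python
from statistics import mean


def crawl_drops(daily_requests, window=7, threshold=0.5):
    """Flag days whose count is below `threshold` times the trailing-window average.

    Returns a list of (day_index, requests, baseline) tuples.
    """
    flagged = []
    for i in range(window, len(daily_requests)):
        baseline = mean(daily_requests[i - window:i])
        if baseline > 0 and daily_requests[i] < threshold * baseline:
            flagged.append((i, daily_requests[i], round(baseline, 1)))
    return flagged


# Hypothetical daily Googlebot request counts, e.g. copied from the Crawl Stats report.
DAILY_REQUESTS = [1000, 980, 1020, 1010, 990, 1005, 995, 1000, 400, 1010]

drops = crawl_drops(DAILY_REQUESTS)
for day, requests, baseline in drops:
    print(f"Day {day}: {requests} requests vs. a trailing average of {baseline}")
```

A flagged day is a prompt to check server errors, robots.txt changes, or deployments around that date, not proof of a problem on its own.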

The Future: How Evolving Algorithms Might Impact Crawl Budget

As the digital landscape evolves, so too does the world of search engines. With advancements in artificial intelligence, machine learning, and user behavior understanding, search engines are constantly refining the way they perceive and analyze web content. This progression has profound implications for SEO professionals and website owners concerning crawl budgets.

The Increasing Intelligence of Search Engine Bots: What to Optimize for?

The very nature of search engine bots is transitioning from rule-based systems to more dynamic, learning-oriented entities. Here’s how this shift impacts crawl budgets:

- Contextual Understanding: With the infusion of AI, search engine bots can better understand content context. This means they may prioritize crawling pages that offer unique value over ones that seem repetitive or derivative, even if the latter are technically “new.”

- Predictive Crawling: Leveraging machine learning, bots might soon be able to predict when a site will publish new content or undergo updates. This foresight can aid in better allocating crawl budgets.

- User Experience (UX) Prioritization: As bots become smarter, they can identify which pages provide a superior UX. Pages with faster load times, mobile optimization, and high user engagement could see more frequent crawls.

For entrepreneurs and top-tier executives, understanding this transformation is crucial. It’s not just about having a vast amount of content; it’s about ensuring the content is valuable, timely, and offers an unparalleled user experience.

What About Crawl Budget in the Context of AI-Driven Search?

As artificial intelligence becomes an integral part of search, it’s going to shape the fundamentals of crawl budget allocation:

Dynamic Crawl Budget Allocation: AI could enable search engines to allocate crawl budgets dynamically, depending on a website’s perceived importance, update frequency, and user engagement metrics.

Feedback Loops: With AI’s ability to process vast amounts of data quickly, search engines might implement feedback loops, where the bot’s crawling behavior is continuously adjusted based on recent site changes and user interaction data.

Personalized Search Results: As search engines lean more into personalization, AI might direct bots to frequently crawl pages that cater to trending user queries or interests. This could mean a diverse range of pages across the web might witness fluctuating crawl frequencies based on shifting global or regional trends.

For decision-makers in the digital realm, this emphasizes the importance of being adaptive. Sticking to old practices might not just be unproductive but detrimental. Embracing change, understanding AI-driven search nuances, and molding strategies accordingly should be the way forward.

Wrapping it up

Crawl budget optimization is pivotal for maximizing a website’s search potential. As web spaces grow and search algorithms evolve, understanding and adeptly managing crawl budgets becomes even more crucial.

Regular monitoring, coupled with strategic adjustments, ensures optimal content visibility. Staying informed and proactive can make the difference between online obscurity and digital prominence.
